What is HLS?

HLS (HTTP Live Streaming) is a live streaming protocol that leverages widely deployed HTTP infrastructure to deliver live video and audio to large audiences.

Initially developed by Apple in 2009, it has gained wide adoption across devices ranging from desktops and mobile phones to smart TVs. The iPhone drove its initial adoption: given the iPhone's significant share of the mobile market, its default support for HLS gave the protocol an excellent boost.

Understanding how HLS works

Let’s understand how HLS works at a high level. From the perspective of a client (any web player), these are the steps it follows to fetch and play media:

- It makes a GET request to a server to fetch a master playlist.

- The client chooses the best bitrate from the list considering the user’s network conditions.

- Next, it makes a GET request to fetch the playlist corresponding to that bitrate.

- It parses the playlist, starts fetching the media segments listed in it, and starts playing.

- It repeats the last two mentioned steps in a loop.
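The steps above can be sketched roughly as follows. This is a minimal illustration, not how any real player is implemented: `fetch` is a hypothetical stand-in for an HTTP GET (backed here by an in-memory dict), the playlists and file names are made up, and the variant choice is simply "the highest BANDWIDTH that fits the measured throughput".

```python
import re

# Hypothetical in-memory "server" (URL -> body). A real client would issue HTTP GETs.
FAKE_SERVER = {
    "master.m3u8": (
        "#EXTM3U\n"
        "#EXT-X-STREAM-INF:BANDWIDTH=493000\n"
        "low.m3u8\n"
        "#EXT-X-STREAM-INF:BANDWIDTH=1727000\n"
        "high.m3u8\n"
    ),
    "low.m3u8": "#EXTM3U\n#EXT-X-TARGETDURATION:10\n#EXTINF:10.0,\nlow00.ts\n#EXT-X-ENDLIST\n",
    "high.m3u8": "#EXTM3U\n#EXT-X-TARGETDURATION:10\n#EXTINF:10.0,\nhigh00.ts\n#EXT-X-ENDLIST\n",
}


def fetch(url):
    """Stand-in for an HTTP GET request."""
    return FAKE_SERVER[url]


def list_variants(master_text):
    """Return (bandwidth, uri) pairs found in a master playlist."""
    lines = [line.strip() for line in master_text.splitlines() if line.strip()]
    variants = []
    for index, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:"):
            match = re.search(r"[:,]BANDWIDTH=(\d+)", line)
            # The URI of the variant is on the line right after the tag.
            variants.append((int(match.group(1)), lines[index + 1]))
    return variants


def choose_variant(variants, throughput_bps):
    """Pick the highest bitrate that fits, else fall back to the lowest one."""
    fitting = [variant for variant in variants if variant[0] <= throughput_bps]
    return max(fitting) if fitting else min(variants)


def list_segments(media_text):
    """Return segment URIs (every non-empty line that is not a tag or comment)."""
    lines = [line.strip() for line in media_text.splitlines()]
    return [line for line in lines if line and not line.startswith("#")]


def play_once(throughput_bps):
    variants = list_variants(fetch("master.m3u8"))     # fetch the master playlist
    _, uri = choose_variant(variants, throughput_bps)  # choose a bitrate
    segments = list_segments(fetch(uri))               # fetch that bitrate's playlist
    return uri, segments                               # a player would now fetch and decode these


print(play_once(600_000))    # ('low.m3u8', ['low00.ts'])
print(play_once(5_000_000))  # ('high.m3u8', ['high00.ts'])
```

A real player would call something like `play_once` repeatedly, re-measuring throughput each time, which is the loop described in the last step.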

This was a rough outline of how clients work with HLS. Now let’s see what these playlists and segments actually are in more detail. To generate this data, I will use the Swiss Army knife of media tooling: ffmpeg.

Let’s create a sample video using a simple command:

ffmpeg -f lavfi -i testsrc -t 30 -pix_fmt yuv420p testsrc.mp4

This creates a sample video of 30 seconds in duration.

Now we'll create an HLS playlist with the following command:

ffmpeg -i testsrc.mp4 -c:v copy -c:a copy -f hls -hls_segment_filename data%02d.ts index.m3u8

This will create a playlist in the same directory, along with its segment files:

- index.m3u8

- data00.ts

- data01.ts

- data02.ts

Puzzled about what they are? Let’s understand what the command did and what these files mean.

We’ll first break down the command.

With -i, we tell ffmpeg to take the MP4 video as input. Note that it can also take RTMP input directly; one just needs to say something like:

ffmpeg -listen 1 -i rtmp://127.0.0.1:1938/live -c:v copy -c:a copy -f hls -hls_segment_filename data%02d.ts index.m3u8

This is another exciting aspect that we will discuss later in this article.

Next, -c:v copy and -c:a copy. These tell ffmpeg to copy the encoded video and audio streams from the input to the output as they are, without re-encoding. This is also where you could encode into different formats, for example, if you want more than one quality in your HLS playlist.

-f tells ffmpeg what our output format will be, which in this case is HLS.

-hls_segment_filename tells ffmpeg what format we want our segment filenames to be in.
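The %02d in data%02d.ts is a printf-style placeholder that gets filled with the segment index, zero-padded to two digits. As a small illustration (this uses Python's own `%` formatting, which follows the same convention for this pattern; it is not ffmpeg's code):

```python
template = "data%02d.ts"

# First three segment names, zero-padded to two digits.
names = [template % index for index in range(3)]
print(names)  # ['data00.ts', 'data01.ts', 'data02.ts']

# The width is a minimum, so larger indexes are not truncated.
print(template % 100)  # data100.ts
```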

And finally, the last argument is the name of our playlist file.

Now, moving on to the files:

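Opening the playlist (index.m3u8) in an editor, it looks like this. Strictly speaking, it is a media playlist rather than a master playlist, since it lists the segments directly: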

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-TARGETDURATION:10

#EXT-X-MEDIA-SEQUENCE:0

#EXTINF:10.000000,

data00.ts

#EXTINF:10.000000,

data01.ts

#EXTINF:10.000000,

data02.ts

#EXT-X-ENDLIST

Let’s understand these tags:

- EXTM3U: M3U (MP3 URL, or Moving Picture Experts Group Audio Layer 3 Uniform Resource Locator in full) is the name of a multimedia playlist format, and this line indicates that we are using the extended M3U format. According to the HLS spec: "It MUST be the first line of every Media Playlist and every Master Playlist."

- EXT-X-VERSION: This tag indicates the compatibility version of our playlist file. There has been more than one iteration of the playlist format, and there are older clients that do not understand newer versions, so any server generating a playlist with a format version greater than 1 must send it. If it is present multiple times, clients should reject the whole playlist.

These were the Basic Tags as specified by the spec. We will now move on to something called Media Playlist Tags.

- EXT-X-TARGETDURATION: The first among them. It is a required tag telling the client the longest segment duration it can expect. Its value is in seconds.

- EXT-X-MEDIA-SEQUENCE: This tag gives the sequence number of the first media segment in the playlist. It is optional and, when absent, the number is taken to be 0. Its only restriction is that it should appear before the first media segment.

- EXTINF: This tag comes under Media Segment Tags and indicates the duration and metadata for each media segment. Its format, according to the spec, is: <duration>,[<title>]. The duration is in seconds; floating-point durations require compatibility version 3 or higher, while older versions must use integers. The title is optional and mostly metadata for humans; ffmpeg seems to skip it by default.

- Each line following an EXTINF tag specifies where to find the media segment. In our case, the segments are in the same folder, so they are listed directly, but they can also be in subfolders like 480p, in which case the name would be 480p/data01.ts. Note that data01 comes from the filename template we gave in the above command, and one can change it very easily.

- EXT-X-ENDLIST: I guess the most difficult-to-understand tag? One would definitely not be able to look and tell what it does. Jokes aside, besides indicating that the list has ended, an interesting property is that it can appear anywhere in the playlist file. If you are building these files manually, be careful to place it in the right spot.
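To make the tags above concrete, here is a minimal sketch of a parser for the sample playlist generated earlier. The function name and the shape of the returned dict are my own choices for illustration; it handles only the tags discussed here and is nowhere near a complete implementation of the spec.

```python
SAMPLE = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:10.000000,
data00.ts
#EXTINF:10.000000,
data01.ts
#EXTINF:10.000000,
data02.ts
#EXT-X-ENDLIST
"""


def parse_media_playlist(text):
    """Parse the handful of media playlist tags discussed in this article."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("playlist must start with #EXTM3U")

    playlist = {
        "version": 1,            # implied when EXT-X-VERSION is absent
        "target_duration": None,
        "media_sequence": 0,     # implied when EXT-X-MEDIA-SEQUENCE is absent
        "segments": [],
        "ended": False,
    }
    seen_version = False
    pending = None  # (duration, title) from the most recent EXTINF tag

    for line in lines[1:]:
        if line.startswith("#EXT-X-VERSION:"):
            if seen_version:
                raise ValueError("more than one EXT-X-VERSION tag")
            seen_version = True
            playlist["version"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-TARGETDURATION:"):
            playlist["target_duration"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            playlist["media_sequence"] = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            duration, _, title = line.split(":", 1)[1].partition(",")
            pending = (float(duration), title)
        elif line == "#EXT-X-ENDLIST":
            playlist["ended"] = True
        elif not line.startswith("#") and pending is not None:
            # A URI line: it belongs to the EXTINF tag that preceded it.
            playlist["segments"].append(
                {"uri": line, "duration": pending[0], "title": pending[1]}
            )
            pending = None
    return playlist


parsed = parse_media_playlist(SAMPLE)
print([segment["uri"] for segment in parsed["segments"]])
print(sum(segment["duration"] for segment in parsed["segments"]))  # 30.0
```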

Keen readers may have noticed that no multiple bitrates are listed here. I wanted to explain the basic structure first with a simple example.

To understand ABR (adaptive bitrate streaming), let’s take an already hosted URL, something like: https://demo.unified-streaming.com/k8s/features/stable/video/tears-of-steel/tears-of-steel.ism/.m3u8

OTTVerse maintains a nice list of test HLS URLs here.

On downloading the playlist, it looks something like this:

#EXTM3U

#EXT-X-VERSION:1

## Created with Unified Streaming Platform (version=1.11.20-26889)

# variants

#EXT-X-STREAM-INF:BANDWIDTH=493000,CODECS="mp4a.40.2,avc1.66.30",RESOLUTION=224x100,FRAME-RATE=24

tears-of-steel-audio_eng=64008-video_eng=401000.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=932000,CODECS="mp4a.40.2,avc1.66.30",RESOLUTION=448x200,FRAME-RATE=24

tears-of-steel-audio_eng=128002-video_eng=751000.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1197000,CODECS="mp4a.40.2,avc1.77.31",RESOLUTION=784x350,FRAME-RATE=24

tears-of-steel-audio_eng=128002-video_eng=1001000.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1727000,CODECS="mp4a.40.2,avc1.100.40",RESOLUTION=1680x750,FRAME-RATE=24,VIDEO-RANGE=SDR

tears-of-steel-audio_eng=128002-video_eng=1501000.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=2468000,CODECS="mp4a.40.2,avc1.100.40",RESOLUTION=1680x750,FRAME-RATE=24,VIDEO-RANGE=SDR

tears-of-steel-audio_eng=128002-video_eng=2200000.m3u8

# variants

#EXT-X-STREAM-INF:BANDWIDTH=68000,CODECS="mp4a.40.2"

tears-of-steel-audio_eng=64008.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=136000,CODECS="mp4a.40.2"

tears-of-steel-audio_eng=128002.m3u8

Over here, we see a new tag EXT-X-STREAM-INF. This tag gives more info about all the variants available for a given stream. The tag is to be followed by an arbitrary number of attributes.

- BANDWIDTH: A compulsory attribute whose value tells us how many bits per second will be sent with this variant.

- CODECS: An attribute listing the codecs of the audio and video in the variant as a comma-separated quoted string. The spec says every EXT-X-STREAM-INF tag should include it.

- RESOLUTION: An optional attribute mentioning the variant’s pixel resolution.

- VIDEO-RANGE: We can ignore this attribute because it is not specified in the spec, though at the time of writing this article, there’s an open issue on hls.js to add support for it.

- SUBTITLES: An optional attribute specified as a quoted string. It must match the value of the GROUP-ID attribute on an EXT-X-MEDIA tag. That tag should also have SUBTITLES as its TYPE attribute.

- CLOSED-CAPTIONS: An optional attribute, mostly the same as SUBTITLES. Two things differentiate it:

  - Its attribute value can be a quoted string or NONE.

  - The TYPE value on the EXT-X-MEDIA tag for closed captions should be CLOSED-CAPTIONS.

The line following these stream-inf tags specifies where to find the corresponding variant stream, a playlist similar to what we saw earlier.
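As a rough sketch of how a client might read these attributes, the snippet below parses a shortened copy of the playlist above, resolves each variant URI against the playlist URL, and picks a variant for a given throughput. The helper names and the selection rule are assumptions made for illustration; real players use more elaborate heuristics and would, for instance, treat the audio-only variants separately.

```python
import re
from urllib.parse import urljoin

MASTER_URL = (
    "https://demo.unified-streaming.com/k8s/features/stable/video/"
    "tears-of-steel/tears-of-steel.ism/.m3u8"
)

# Shortened copy of the playlist shown above.
MASTER = """#EXTM3U
#EXT-X-VERSION:1
#EXT-X-STREAM-INF:BANDWIDTH=493000,CODECS="mp4a.40.2,avc1.66.30",RESOLUTION=224x100,FRAME-RATE=24
tears-of-steel-audio_eng=64008-video_eng=401000.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1727000,CODECS="mp4a.40.2,avc1.100.40",RESOLUTION=1680x750,FRAME-RATE=24,VIDEO-RANGE=SDR
tears-of-steel-audio_eng=128002-video_eng=1501000.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=68000,CODECS="mp4a.40.2"
tears-of-steel-audio_eng=64008.m3u8
"""

# NAME=value pairs; a quoted value may itself contain commas (see CODECS).
ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(attribute_list):
    """Turn 'A=1,B="x,y"' into {'A': '1', 'B': 'x,y'}."""
    return {name: value.strip('"') for name, value in ATTRIBUTE.findall(attribute_list)}


def parse_variants(text, base_url):
    """Return one dict per EXT-X-STREAM-INF tag, with an absolute 'url' added."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    variants = []
    for index, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:"):
            attrs = parse_attributes(line.split(":", 1)[1])
            attrs["BANDWIDTH"] = int(attrs["BANDWIDTH"])
            # The variant's URI is the line right after the tag.
            attrs["url"] = urljoin(base_url, lines[index + 1])
            variants.append(attrs)
    return variants


def pick_variant(variants, throughput_bps):
    """Highest BANDWIDTH that fits the throughput, else the lowest available."""
    fitting = [v for v in variants if v["BANDWIDTH"] <= throughput_bps]
    if not fitting:
        return min(variants, key=lambda v: v["BANDWIDTH"])
    return max(fitting, key=lambda v: v["BANDWIDTH"])


variants = parse_variants(MASTER, MASTER_URL)
print(variants[0]["CODECS"])                             # mp4a.40.2,avc1.66.30
print(pick_variant(variants, 1_000_000)["RESOLUTION"])   # 224x100
print(variants[0]["url"])
```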

RTMP ingestion

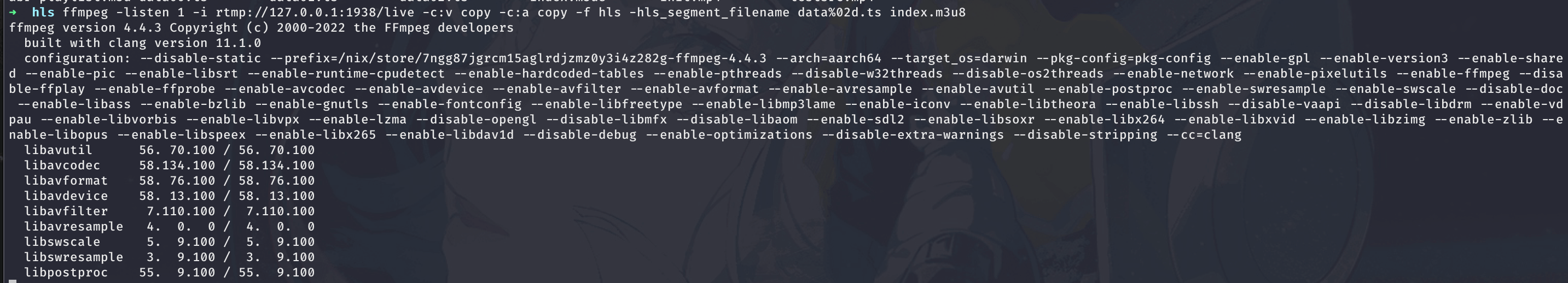

Earlier, we saw an RTMP input ffmpeg command. It will help us understand another property of live HLS playlists. Revisiting the command, it looked like this:

ffmpeg -listen 1 -i rtmp://127.0.0.1:1938/live -c:v copy -c:a copy -f hls -hls_segment_filename data%02d.ts index.m3u8

The command should give an output similar to what we have below and then seem stuck; ffmpeg is simply waiting for an RTMP source to connect.

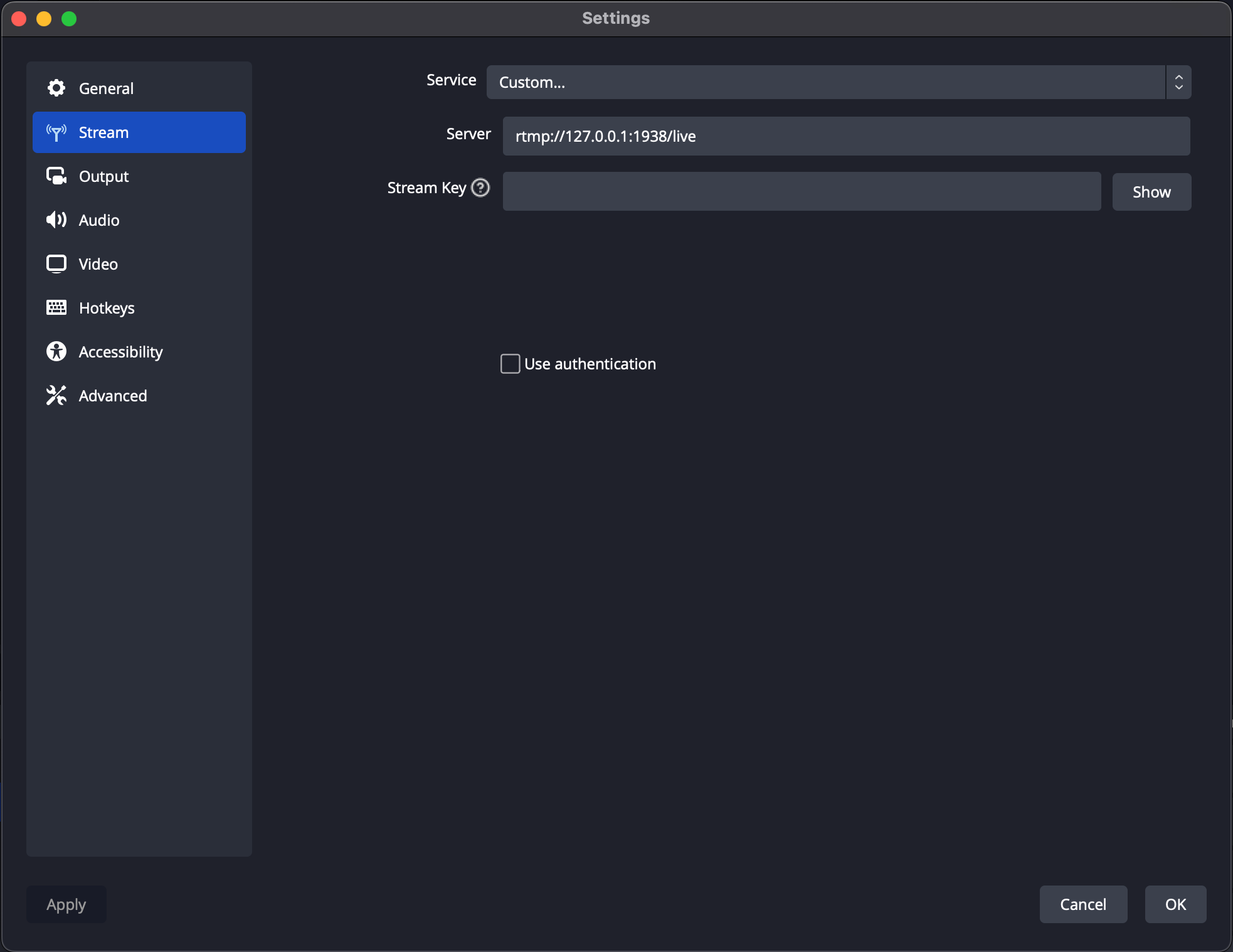

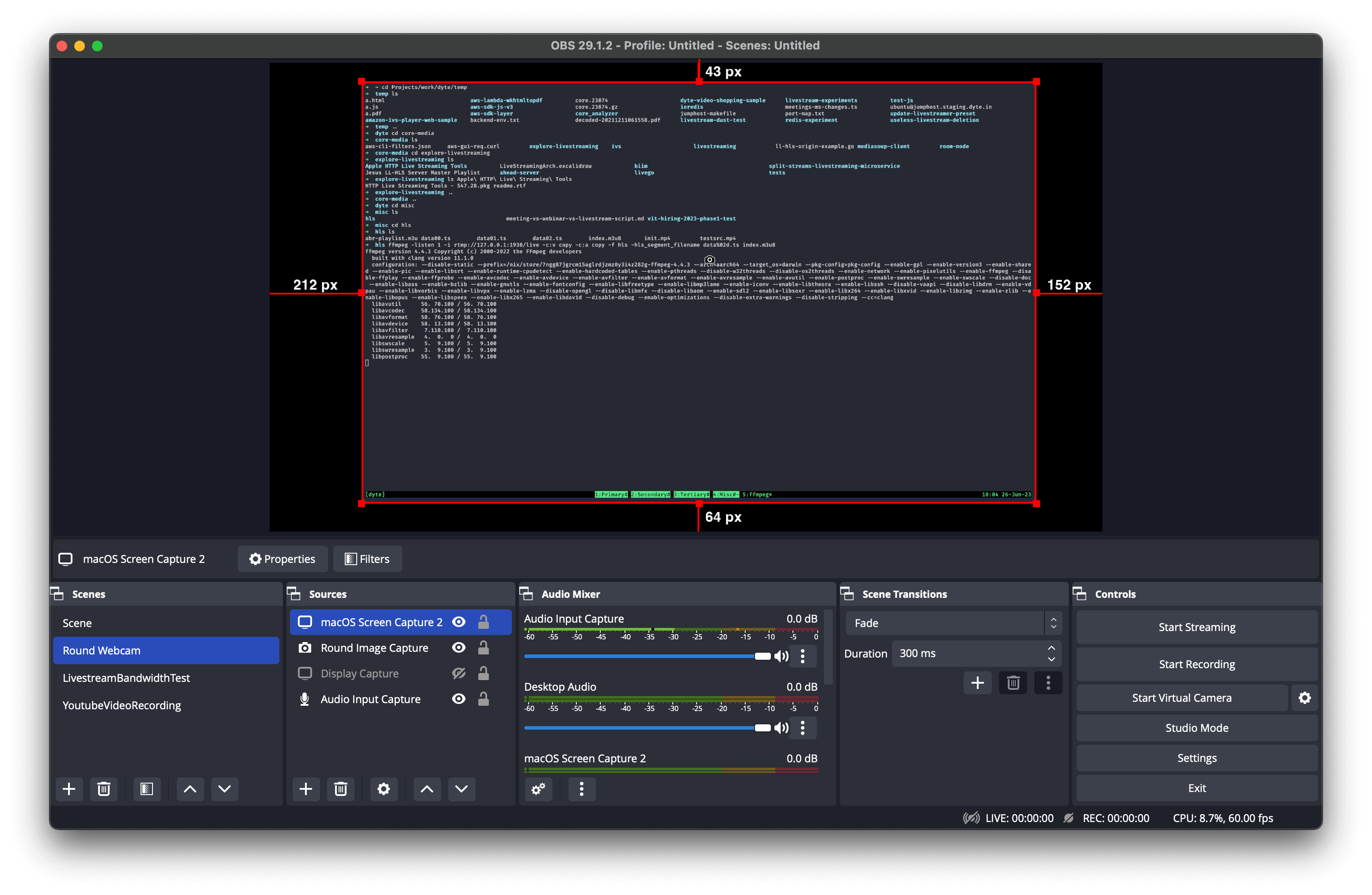

Now, let’s satisfy your dream of becoming a streamer! One can use any RTMP source, even ffmpeg, but in our demo, we will use OBS to send data to our ffmpeg server listening for RTMP input. I have created a simple screen share capture input in it and then navigated to the Stream section in Settings. Here you will see a screen similar to this:

Let’s set our Service to Custom and add the Server rtmp://127.0.0.1:1938/live with an empty Stream Key. Now click on Apply and press OK.

We will land at the default view of OBS here:

When we click on Start Streaming, we will stream to our local RTMP-to-HLS server. You will notice that in the folder in which you ran the ffmpeg command, there are new files named:

- index.m3u8

- data files following the template data{0-9}{0-9}.ts

Let’s look at index.m3u8 at one instant. Here is what I captured:

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-TARGETDURATION:4

#EXT-X-MEDIA-SEQUENCE:3

#EXTINF:4.167000,

data03.ts

#EXTINF:4.167000,

data04.ts

#EXTINF:4.166000,

data05.ts

#EXTINF:4.167000,

data06.ts

#EXTINF:4.167000,

data07.ts

Notice how there’s no end playlist tag? That’s because this is a live playlist that keeps on changing over time with new segments. For example, after some time, my local playlist looks something like this:

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-TARGETDURATION:4

#EXT-X-MEDIA-SEQUENCE:9

#EXTINF:4.167000,

data09.ts

#EXTINF:4.167000,

data10.ts

#EXTINF:4.166000,

data11.ts

#EXTINF:4.167000,

data12.ts

#EXTINF:0.640667,

data13.ts

#EXT-X-ENDLIST

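Old segments are dropped from the playlist as new ones are added. This helps one deliver the latest data when live streaming an event. Note that this second capture ends with EXT-X-ENDLIST and a short final segment: ffmpeg writes the tag when the stream stops, so it only shows up once the broadcast is over.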
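One way to picture this sliding window: every segment has a sequence number equal to EXT-X-MEDIA-SEQUENCE plus its position in the playlist, and a client that reloads the playlist only needs the segments numbered beyond what it has already seen. The sketch below uses the two captures above plus a hypothetical in-between snapshot (`middle`, not something I captured); the helper names are my own.

```python
def sequence_numbers(media_sequence, uris):
    """Map each segment's media sequence number to its URI."""
    return {media_sequence + offset: uri for offset, uri in enumerate(uris)}


def new_segments(previous, current):
    """URIs in `current` whose sequence number is beyond anything in `previous`."""
    last_seen = max(previous) if previous else -1
    return [uri for number, uri in sorted(current.items()) if number > last_seen]


# First capture above: EXT-X-MEDIA-SEQUENCE:3
first = sequence_numbers(3, ["data03.ts", "data04.ts", "data05.ts", "data06.ts", "data07.ts"])

# Hypothetical snapshot a couple of segments later: EXT-X-MEDIA-SEQUENCE:5
middle = sequence_numbers(5, ["data05.ts", "data06.ts", "data07.ts", "data08.ts", "data09.ts"])

# Second capture above: EXT-X-MEDIA-SEQUENCE:9
later = sequence_numbers(9, ["data09.ts", "data10.ts", "data11.ts", "data12.ts", "data13.ts"])

print(new_segments(first, middle))  # ['data08.ts', 'data09.ts']
print(new_segments(middle, later))  # ['data10.ts', 'data11.ts', 'data12.ts', 'data13.ts']
```

A client that only ever saw the first and the last capture would have missed data08.ts entirely, which is why live players reload the playlist regularly.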

This covers playlists to a reasonable extent. Now let’s talk about segment files a bit.

Container formats

Media segments use container formats to store actual encoded video/audio data. Discussing container formats in detail would be out of the scope of this particular article. A good starting point to know more about it might be this.

HLS initially only supported MPEG-2 TS containers. This decision was different from the container formats used by other HTTP-based protocols. For example, DASH has typically been deployed with fMP4 (fragmented MP4). Apple eventually announced support for fMP4 in HLS, and it is now officially supported by the spec.

Media segment size

The media segment size (that is, its duration) is not fixed by the spec. People usually started with segments of about 10 seconds, but another part of the spec caused this number to change. According to the spec, one must fetch and ready at least three segments to start playing. For 10-second segments, this would mean loading 30 seconds of buffer before the client could begin playing. The segment size can be changed freely, and it is a common parameter to tune when reducing latency.
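The arithmetic behind that 30-second figure is simple enough to sketch. The function name is made up for illustration, and the three-segment requirement is taken from the paragraph above as a simplifying assumption:

```python
def startup_buffer_seconds(segment_duration, segments_required=3):
    """Seconds of media a client loads before playback can begin."""
    return segment_duration * segments_required


for duration in (10, 6, 4, 2):
    buffered = startup_buffer_seconds(duration)
    print(f"{duration}s segments -> {buffered}s buffered before playback")
```

Shorter segments shrink the startup buffer, at the cost of more requests and more frequent playlist reloads.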

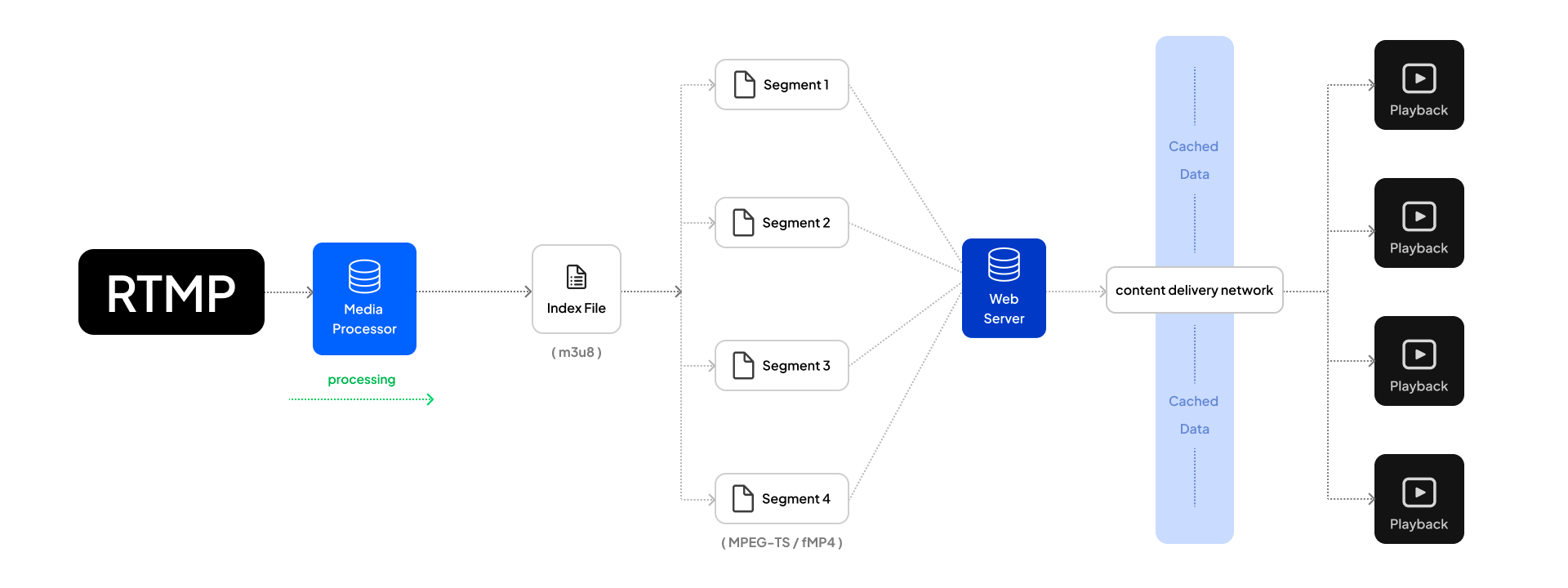

Component-wise breakdown

- Ingest: Ingest (relatively less relevant) marks the point where our system takes input from the user. This can be done in various formats with different protocols, but platforms like Twitch and YouTube have popularized RTMP. However, this seems to be changing with the introduction of WebRTC in OBS, which makes it possible to use WHIP to ingest content at much lower latencies.

- Processing: Media Processing includes transcoding, transmuxing, etc., depending on the ingest format used. Input here is converted into MPEG-TS or fMP4 segments indexed in the playlist and made ready to be consumed.

- Delivery: Delivery is essential to making HLS scalable. A web server hosts the playlists and segments, which are then sent to the end user through a CDN. The CDN caches the responses to these GET requests and serves the static data from its own side, which keeps the protocol highly scalable even at times of much higher traffic.

- Playback: Smooth playback is vital for a pleasant end-user experience. ABR must be adequately implemented on the player side so that the user sees minimal quality dips during the stream.
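To see why the delivery step scales so well, here is a toy model of a caching edge in front of an origin server. `CachingEdge` and `origin_fetch` are invented names for this sketch; a real CDN also deals with expiry, invalidation, and short cache lifetimes for live playlists, none of which is modeled here.

```python
class CachingEdge:
    """Toy stand-in for a CDN edge that caches responses by URL."""

    def __init__(self, origin_fetch):
        self._origin_fetch = origin_fetch
        self._cache = {}

    def get(self, url):
        # Only the first request for a URL reaches the origin.
        if url not in self._cache:
            self._cache[url] = self._origin_fetch(url)
        return self._cache[url]


origin_hits = []


def origin_fetch(url):
    """Pretend origin server that records every request it receives."""
    origin_hits.append(url)
    return f"bytes of {url}".encode()


edge = CachingEdge(origin_fetch)

# A thousand viewers ask for the same segment.
for _viewer in range(1000):
    edge.get("data03.ts")

print(len(origin_hits))  # 1
```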

Features provided by HLS

- Distributes over HTTP, a common format, making it easily reachable everywhere

  - By using HTTP, HLS benefits from the underlying TCP implementation, so retransmissions are handled out of the box.

  - Much of the internet relies on HTTP, making it deliverable on virtually all consumer devices.

  - This is more challenging for UDP-based protocols like RTP, which sometimes need things like NAT traversal to work properly.

- Easy CDN-level caching

  - Scaling HLS becomes super easy because HTTP caching is widely supported, and offloading it to good CDNs can reduce the load on the source server to a minimum while still delivering the same audio and video data to all consumers.

- Adaptive Bitrate Streaming (ABR)

  - HLS supports ABR, so users with a poor internet connection can quickly shift to lower bitrates and continue enjoying the stream.

- Inbuilt ad insertion support

  - HLS provides easy-to-use tags to insert ads dynamically during a stream.

- Closed captions

  - It also supports embedded closed captions and even DRM content.

- Using fMP4

  - Using fragmented MP4 helps reduce encoding and delivery costs and increases compatibility with MPEG-DASH.

Disadvantages of HLS

- HLS is widely known for its painfully high latency. One simple way to improve it slightly is to fine-tune the segment size and switch the ingest to a lower-latency protocol like RTMP. Still, for crisp live experiences, vanilla HLS is not enough. Community LL-HLS and Apple LL-HLS have emerged as solutions; each approaches the problem in its own way and promises considerably lower latency.

- The HLS spec specifies that the client needs to load at least three segments before starting. So imagine you set the segment size to 10 seconds: you already have 30 seconds of latency in the stream from the start. This also makes tuning the segment size very important.

Final Thoughts

Having open standards helps adoption and growth while making it much easier to interoperate between open and closed software/hardware stacks. HLS does the same in the live streaming space: it is widely adopted, simple, yet robust. It did not try to reinvent the wheel; instead, it embraced widely accepted protocols and built on top of them.

If this article tickled the builder or the hacker in you, then check out Dyte’s live streaming SDK. We provide easy-to-use SDKs to deliver interactive live streams at scale with much lower latency than traditional HLS. Feel at home with the same friendly developer experience and extensibility.

Get better insights on leveraging Dyte’s technology and discover how it can revolutionize your app’s communication capabilities with its SDKs.