The last 18 months have brought a lot of change to the world, and nowhere more than in how we meet and interact with each other. In these times of change, live video has emerged as the closest proxy to real-life interaction for building trust and connection, and it has found significant adoption across use cases such as live classes, telehealth, fitness and wellness, social meetups, conferences, exhibitions, remote work, dating, B2B sales, and customer support. It has fundamentally changed the paradigm of sectors like education and healthcare while giving birth to new ones like remote work, and we believe this is only the tip of the iceberg.

When deciding to offer live video, every developer has 2 options:

- Conduct video calls using platforms like Zoom, Google Meet, or Microsoft Teams. These platforms are highly reliable, let you get started quickly with minimal effort, and give users the standard live video calling experience they are increasingly accustomed to.

- Build your own stack on top of a live video SDK. Currently, this takes more time than the first approach but allows developers a great deal of freedom to build a more customized and engaging experience for their users.

Today, we will take a deeper look at the latter. Below are a few popular approaches currently in use:

1. The 'DIY' approach

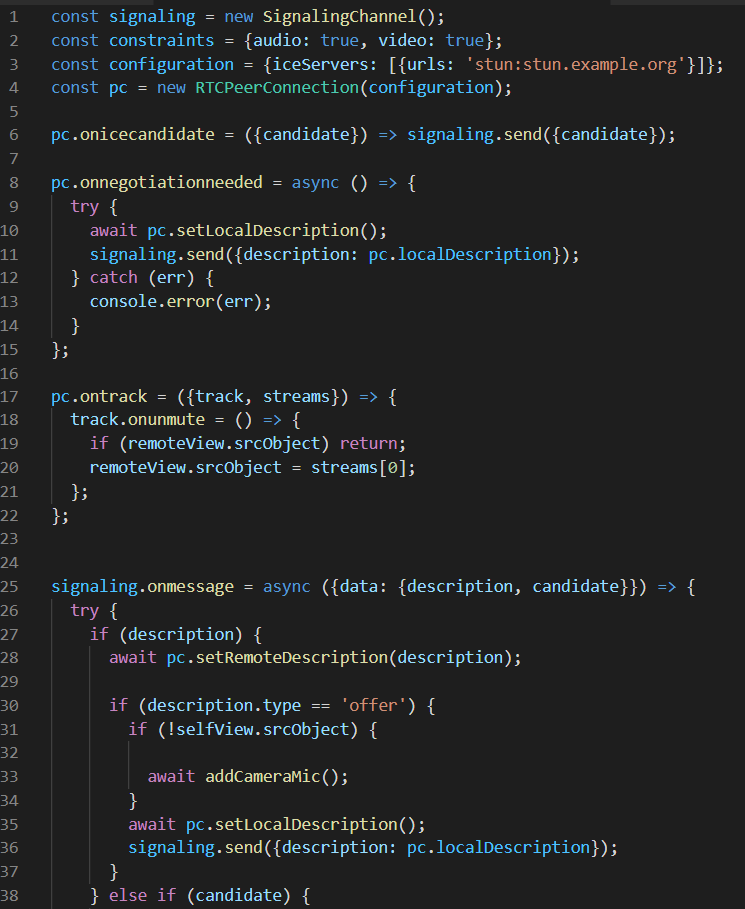

In this approach, the goal is to have your engineering team build the entire audio/video infrastructure from scratch. This usually involves setting up a PoC for a live video call, then modifying it to fit your use case, and finally scaling it to reach your target audience. In 2021, you would implement this using WebRTC - an open standard, made up of browser APIs and network protocols, that lets you add real-time communication capabilities to your applications and send voice, video, and generic data between peers.

This approach gives you full control over your infrastructure, and the power to customize how your live video experience looks and exactly how it functions. But with great power comes great responsibility (yup, we all love Spider-Man!). Here are a few concerns if you choose to go with this approach:

Although WebRTC has been around for a few years now, it still has some ground to cover in terms of stability. There are several edge cases where it breaks, and it takes a lot of data to predict what those edge cases are.

- For instance, Safari does not support VP9 encoding, and iOS doesn't even support WebRTC prior to version 12. Also, mobile devices have far less battery capacity, so media streams must be sent at a much lower bitrate to keep the device from discharging quickly.

- To gather data about where your implementation is breaking, you would need to have a lot of users, but would you really want your users to use a product with a lot of missing edge cases?
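To make the codec and bitrate concerns above concrete, here is a minimal sketch of the kind of per-device decision a DIY stack ends up encoding. Everything in it is an assumption for illustration: the `DeviceProfile` shape, the preference order, and the bitrate caps are hypothetical values, not recommendations. In a browser, the supported codec list would typically come from feature detection (for example `RTCRtpSender.getCapabilities("video")`) rather than being hard-coded.

```typescript
// Hypothetical description of the device we are sending from.
interface DeviceProfile {
  supportedCodecs: string[]; // e.g. ["VP8", "H264"]
  isMobile: boolean;
}

interface SendSettings {
  codec: string;
  maxBitrateKbps: number;
}

// Illustrative assumptions: preference order and bitrate caps are made up.
const CODEC_PREFERENCE: string[] = ["VP9", "VP8", "H264"];
const DESKTOP_MAX_KBPS = 1500;
const MOBILE_MAX_KBPS = 500;

// Pick the most preferred codec the device supports, and cap the bitrate
// lower on mobile to reduce battery drain.
function chooseSendSettings(device: DeviceProfile): SendSettings {
  for (const codec of CODEC_PREFERENCE) {
    if (device.supportedCodecs.indexOf(codec) !== -1) {
      return {
        codec: codec,
        maxBitrateKbps: device.isMobile ? MOBILE_MAX_KBPS : DESKTOP_MAX_KBPS,
      };
    }
  }
  throw new Error("No mutually supported video codec");
}
```

A real implementation would need many more branches than this (browser version quirks, hardware encoder availability, and so on), which is exactly why gathering data on edge cases matters.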

Developing live video is a difficult challenge, and so is scaling it. For a young startup, resources are limited and there are multiple engineering issues to take care of. Building your own video stack means devoting a considerable portion of your resources to building, maintaining, and scaling it, while also building out the non-video parts of the platform that are core to the business model. A lot of new issues spring up once your implementation is used by a large user base.

- One of the major challenges the DevOps team would face is the delay between triggering a scale-up and the new instances actually being provisioned by the cloud provider. So, you would need to recognize usage patterns and scale up ahead of time to handle spikes where a large number of people join a call within a short period.

- Another related challenge is to perform load testing effectively. The infrastructure required to load-test realistically is much more complex than the video servers themselves. Some services exist for this exact purpose, but companies generally end up making their own.

- For client-side metrics, you'll probably find that integrating a third-party data collection service is a hassle, and end up writing your own library to collect the metrics and feed them to a self-hosted ingestion server.

- The performance of your system in production depends heavily on many factors outside your control - operating system versions, browser versions, network conditions (internet speed, packet loss, jitter, etc.), and even the geographical distance from the server.
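The scale-up delay mentioned above can be sketched as a small capacity calculation. Because instances requested now only become available after the provisioning delay, the request has to be sized against the load forecast for that later point in time. The function name, the forecast format, and the 20% headroom default below are all hypothetical; how the forecast itself is produced (the hard part) is left out.

```typescript
// forecast[i] is the expected number of concurrent participants at time step i.
// delaySteps is how many time steps a new instance takes to come online.
// All names and the default headroom are illustrative assumptions.
function instancesToRequest(
  forecast: number[],
  nowIndex: number,
  delaySteps: number,
  capacityPerInstance: number,
  alreadyProvisioned: number,
  headroom: number = 1.2
): number {
  if (forecast.length === 0) {
    return 0;
  }
  // Instances requested now are only usable at nowIndex + delaySteps,
  // so size against the forecast at that point (clamped to the last entry).
  const targetIndex = Math.min(nowIndex + delaySteps, forecast.length - 1);
  const expectedLoad = forecast[targetIndex] * headroom;
  const needed = Math.ceil(expectedLoad / capacityPerInstance);
  // Never return a negative number; scale-down is a separate decision.
  return Math.max(0, needed - alreadyProvisioned);
}
```

For example, with a forecast of `[100, 120, 400, 900]` participants, a two-step provisioning delay, 100 participants per instance, and two instances already running, the sketch asks for three more instances at step 0, well before the load at step 0 alone would justify them.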

A number of open-source projects also exist, which give developers a great head start if they're looking to build their own infrastructure - the most popular of these include Jitsi, Mediasoup, Janus, and Pion. These projects provide a layer of abstraction and expose a number of helper functions to perform various tasks, such as creating transports, etc. They have helpful guides on how to get started, but you would still face the aforementioned issues regarding scaling, resources, etc.

2. The 'Zoom' approach

Everybody loves Zoom, and for good reason. It is reliable, and there is an SDK to integrate Zoom directly into your apps. It is one of the best options you have if your primary focus is mobile applications and you do not need a lot of personalization in your application.

However, wonderful as it may sound, there are a few things you might want to note before you go ahead with it:

- The Zoom SDK does not allow you to customize the UI of your video call. This might be a deal-breaker for some products that really insist on providing a superior user experience and having a consistent design throughout their application.

- Zoom does not currently use WebRTC. They have built their own ultra-low-latency live video tech, which helps them get amazing performance in their native desktop app but also means it does not work as well in browsers.

- There is very limited post-processing that you can do since you do not receive the raw audio-video streams. Most ML pipelines are built to work with WebRTC streams, and unfortunately you can't use them if you're using the Zoom SDK.

We have all used Zoom video conferencing, but let's be honest - Zoom fatigue is real. So, in case you are looking - here's a handy list of video conferencing platforms that are great Zoom alternatives.

3. The 'WebRTC ++' approach

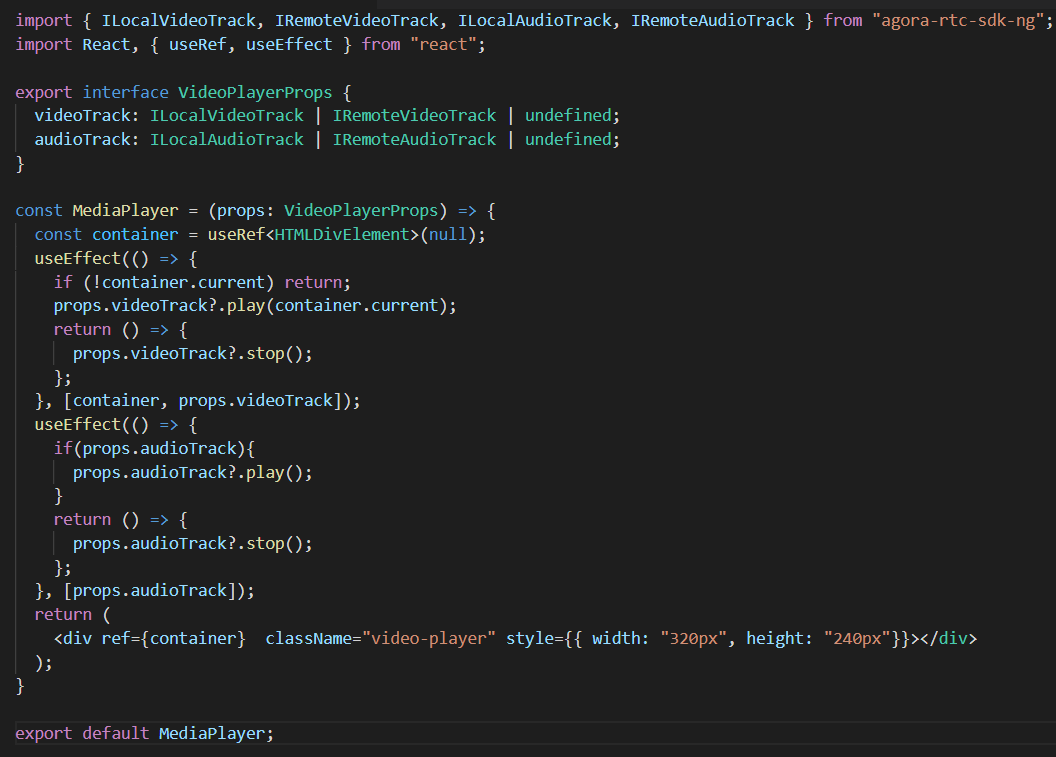

If you want to retain control over your live video experience but do not have the bandwidth or expertise to build the entire video infrastructure stack from scratch, then this is the approach to go with. The most popular SDKs in this category are Agora and Twilio. They act as a "layer" between your code and raw WebRTC and focus on delivering the best experience with a "bare-bones" implementation. These SDKs allow you to customize your experience to the deepest level, without having you go insane thinking about all the things that could possibly go wrong in your WebRTC implementation.

This seems like the best of both worlds: you get a decently reliable system with a lot of customizability. However, there are some things you should consider:

- The use cases for live video have evolved, and it is now evident that most companies want a really good out-of-the-box experience instead of having to spend resources writing a lot of code. But Agora and Twilio largely give you the "bare-bones" experience so that you can customize it as much as you want, which is why their SDKs are a thin layer over WebRTC. So, you might again face issues handling edge cases across different browsers and devices that they do not handle in their layer.

- Customizability is costly in terms of effort. There is a vast range of APIs and SDKs that developers must familiarize themselves with to meet their requirements. For instance, if you wanted a video call to shift to an audio-only experience on mobile phones, you would likely have to write the switching logic yourself, and maybe even use multiple APIs from the same provider.

- Of course, Agora and Twilio provide detailed documentation on how to handle most of these cases, but it is not the most user-friendly and the support is not instantaneous. All of this implies a significant amount of developer time and effort building something that is not their core product.
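As an illustration of the audio-only switching logic mentioned above, here is a minimal provider-agnostic sketch. It is not Agora's or Twilio's API: the `CallConditions` shape and both thresholds are hypothetical. It uses two thresholds (hysteresis) so that a connection hovering around a single cut-off does not make the call flip back and forth between modes. The bandwidth estimate is assumed to come from elsewhere, for instance from `RTCPeerConnection.getStats()` in a raw WebRTC setup.

```typescript
type MediaMode = "audio-video" | "audio-only";

// Hypothetical snapshot of the conditions the client is running under.
interface CallConditions {
  isMobile: boolean;
  availableKbps: number; // estimated available bandwidth
}

// Illustrative thresholds; the gap between them prevents rapid flapping.
const DROP_VIDEO_BELOW_KBPS = 300;
const RESTORE_VIDEO_ABOVE_KBPS = 600;

function nextMediaMode(current: MediaMode, conditions: CallConditions): MediaMode {
  // In this sketch only mobile clients are ever downgraded.
  if (!conditions.isMobile) {
    return "audio-video";
  }
  if (current === "audio-video" && conditions.availableKbps < DROP_VIDEO_BELOW_KBPS) {
    return "audio-only";
  }
  if (current === "audio-only" && conditions.availableKbps > RESTORE_VIDEO_ABOVE_KBPS) {
    return "audio-video";
  }
  // Between the two thresholds, keep whatever mode we are already in.
  return current;
}
```

Acting on the result (stopping the camera track, updating the UI, notifying other participants) is where the provider-specific APIs come in, and that wiring is usually the larger share of the work.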

Enter Dyte

As we spoke to multiple teams across use cases, we realized that developers today have to choose between the speed of getting started (use the Zoom SDK) and retaining the freedom to customize the live video offering for their users (use WebRTC, Agora, or Twilio). We wondered if and how we could bring faster deployment and greater customizability together under one roof, and thus we started Dyte. Our three key pillars are:

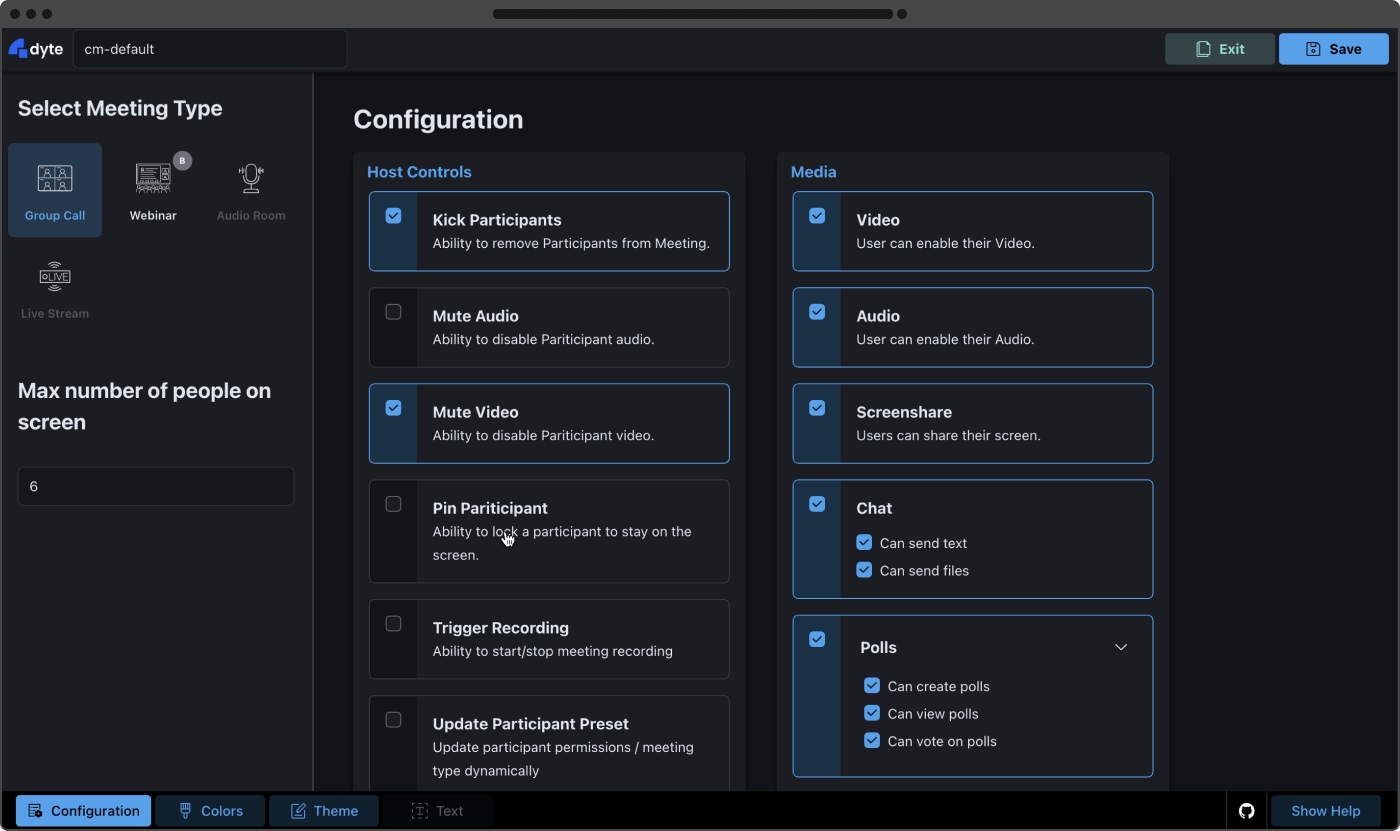

- Less code to get you started faster: We provide an SDK that not only lets you integrate within your product with minimal effort but also lets you customize the complete look and feel with the same level of ease! (In fact, we have had clients go live in production within hours of starting to integrate Dyte.) Our SDK allows you to set permissions for users and customize the UI to fit your design, while we take care of your video call behind the scenes. You can change your call into an audio-only setting, a live stream, or even a webinar, with just a few configuration changes on our developer portal. It also handles edge cases internally - for example, it dynamically changes the incoming video quality based on the network conditions on the user's device.

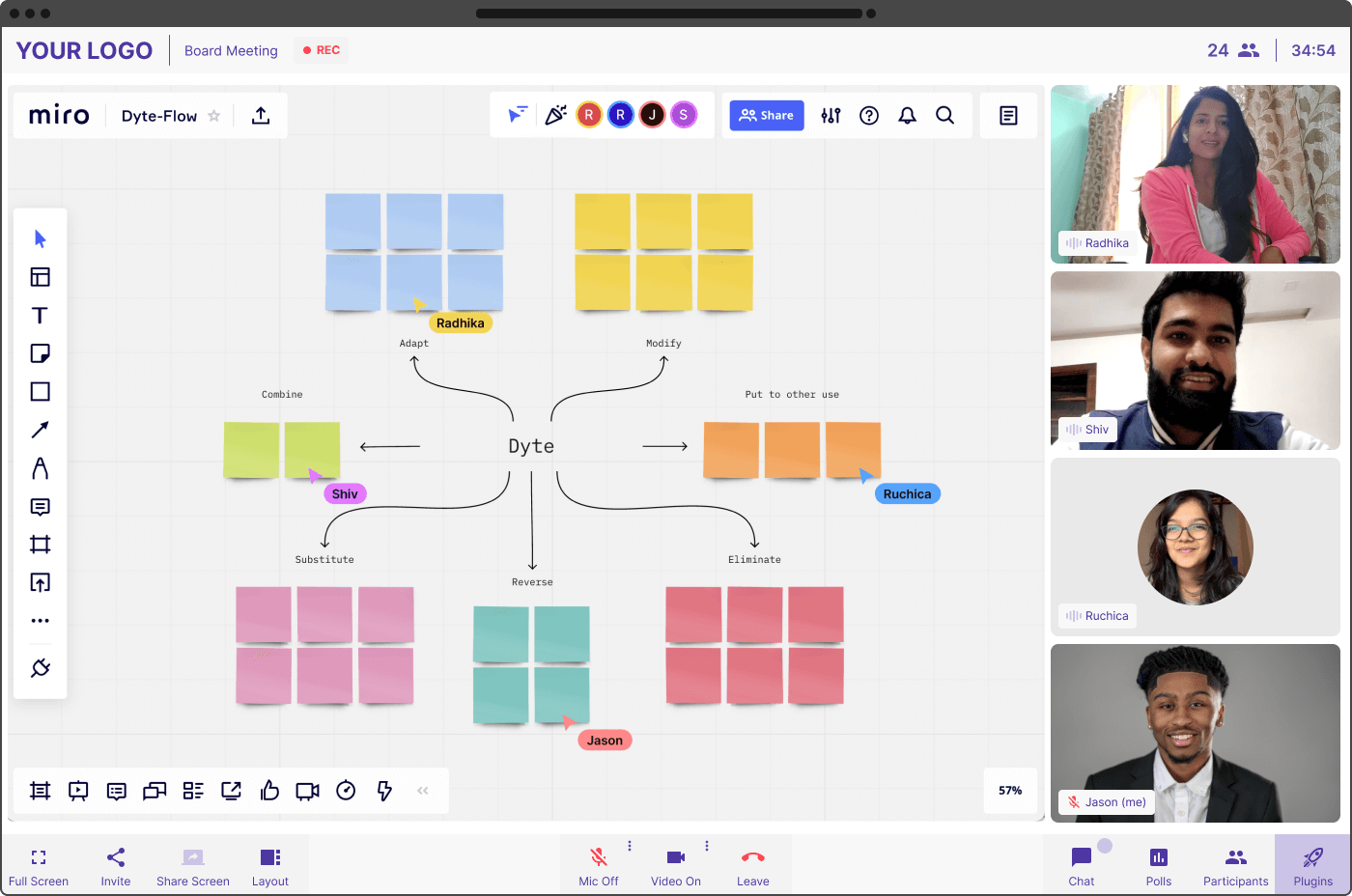

- Plugins to make live video fun: The whole idea behind integrating live audio/video within products is to make interactions more human, more real-time, and more immersive. Collaborating over a call with screen sharing alone just does not cut it anymore, so to make such experiences more immersive and collaborative, we provide Plugins! With plugins, you can add your own web apps within a call or use pre-built options such as whiteboarding, YouTube, remote browser, etc. You will also be provided with pipelines to access raw media streams and process them, as in the background change module. We are always excited to see innovative solutions - that's why we provide an SDK to let you build custom plugins, so you are limited only by your own imagination.

- High observability with instant support: One of our key goals is to build highly developer-friendly and observable live video SDKs. We achieve this by collecting detailed metrics throughout the duration of the call, which not only give you insight into the engineering stats but can also be used to gain business insights, such as measuring attentiveness or the different levels and methods of interaction by users. Also, being developers ourselves, we understand that we all need help from time to time, so we want to give our customers the same swift support that we would love to have. You'll always have a dedicated support channel to help you with any issues you might face with integration or debugging. We're just a ping away!

Live video is shaping the way the world interacts and we at Dyte are doing our bit to make it more seamless, engaging, and fun. So, if you are building a product that requires the use of live video/audio, do check us out and get started here on your 10,000 free minutes which renew every month. If you have any questions, you can reach us at support@dyte.io or ask our developer community.