Interactive Connectivity Establishment (ICE) is one of the pillars upon which WebRTC is founded; without ICE, there would be no WebRTC. It’s an interesting framework that enables seamless network traversal without an explicit understanding of the underlying topology or architecture. In this post, we'll delve into ICE and discover how it works for our underlying media layer.

🗺️ A little background

Typically, protocols that establish a session between peers do so by exchanging IP addresses and ports. This breaks down when peers sit behind Network Address Translators (NATs). A NAT rewrites the source address of outgoing packets to one of its own and tracks each translation in an internal mapping table, so the address a host knows for itself is often unreachable from the outside.

Over the years, many solutions to this problem have been proposed, including Application Layer Gateways (ALGs) and the original version of STUN (which has since been obsoleted).

Unfortunately, each of these solutions works well in some network topologies and poorly in others, so deploying one means making assumptions about the architecture of the network it will run in. Those assumptions are a source of fragility and complexity, both of which are undesirable.

🧊 ICE exists for a reason. Here's why:

A typical WebRTC scenario involves (at least) two peers, or agents, that want to communicate. Initially, neither agent knows anything about its own network topology; in particular, either one may sit behind zero, one, or several tiers of NAT. ICE lets the agents learn enough about their topologies to find one or more paths over which they can connect.

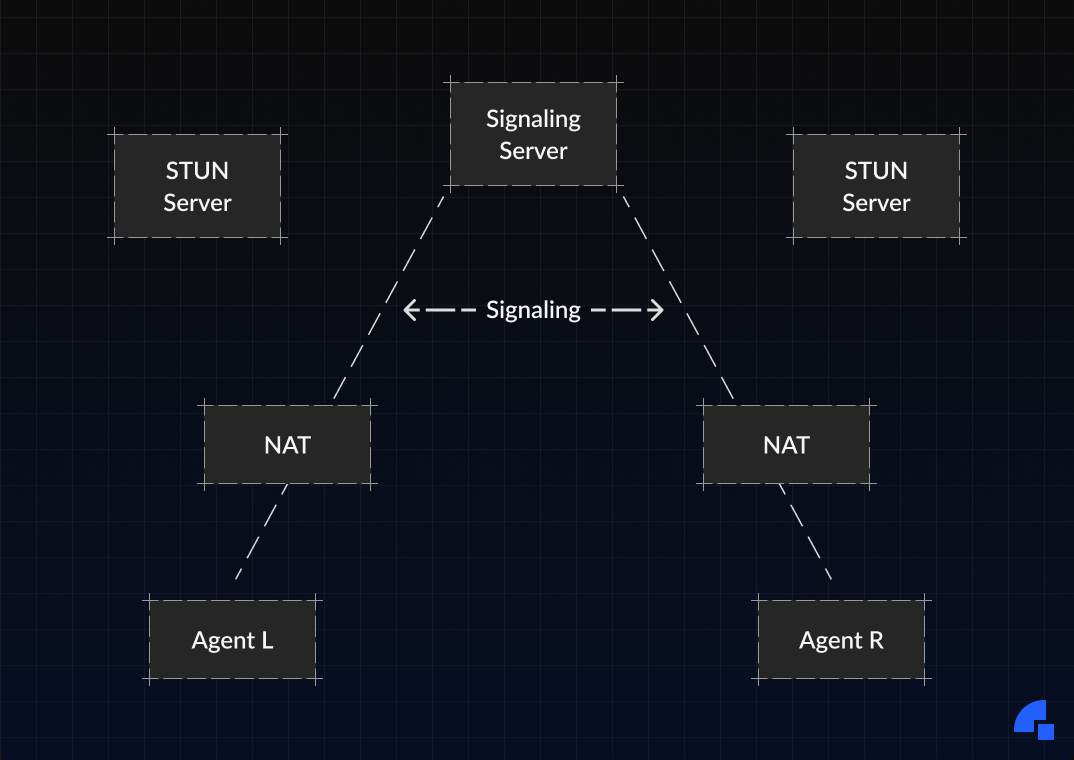

⚙️ A typical deployment scenario

The above figure describes a typical scenario in an ICE deployment. Each agent has a number of candidate transport addresses (a combination of IP address and port for a particular transport protocol), which can be used for communication with the other agent. These can include:

- A transport address on a directly attached network interface

- A translated transport address on the public side of a NAT (a "server-reflexive" address)

- A transport address allocated from a TURN server (a "relayed address")

Theoretically, any of L’s candidate transport addresses could be used to communicate with any of R’s. In practice, however, many combinations won’t work. For example, if L and R are behind different NATs, their host addresses are typically private and cannot reach each other directly. The purpose of ICE is to discover which pairs do work, which it does by systematically trying every pair (in a carefully sorted order) until it finds one or more that succeed.
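In practice, RFC 8445 narrows "any with any" slightly: a local candidate is only paired with a remote candidate that has the same component ID and the same IP address version. The sketch below forms pairs that way. The `Candidate` class, `form_pairs` function, and all addresses are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from ipaddress import ip_address
from itertools import product


@dataclass(frozen=True)
class Candidate:
    ip: str         # IPv4 or IPv6 address of the transport address
    port: int
    component: int  # component ID, e.g. 1 for RTP, 2 for RTCP
    kind: str       # "host", "srflx" (server-reflexive) or "relay"


def form_pairs(local: list[Candidate], remote: list[Candidate]) -> list[tuple[Candidate, Candidate]]:
    """Pair each local candidate with each compatible remote candidate."""
    pairs = []
    for ours, theirs in product(local, remote):
        # Only pair candidates that belong to the same component...
        if ours.component != theirs.component:
            continue
        # ...and use the same IP version: IPv4 cannot reach IPv6 directly.
        if ip_address(ours.ip).version != ip_address(theirs.ip).version:
            continue
        pairs.append((ours, theirs))
    return pairs


# Hypothetical candidates for agents L and R.
L = [Candidate("10.0.0.2", 50000, 1, "host"), Candidate("203.0.113.5", 61000, 1, "srflx")]
R = [Candidate("192.168.1.7", 40000, 1, "host"), Candidate("2001:db8::1", 40002, 1, "host")]
for ours, theirs in form_pairs(L, R):
    print(f"{ours.ip}:{ours.port} -> {theirs.ip}:{theirs.port}")
```

Here R's IPv6 host candidate is left out, so only two of the four combinations become pairs to check.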

🧩 Bringing all the candidates together

As mentioned earlier, there are different types of candidates; some are derived from network interfaces, and others are discoverable via STUN and TURN.

Host candidates

Host candidates are the first category: their transport address is obtained directly from a local interface, such as Ethernet or Wi-Fi, or from a tunnel interface, such as a VPN. In all cases, the network interface appears to the agent as a local interface from which ports (and thus candidates) can be allocated.
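To make "allocating a port" concrete, here is a minimal sketch that turns one interface address into a host candidate by binding a UDP socket to port 0, which asks the operating system for any free port. It assumes you already know the interface address (enumerating interfaces is platform-specific and left out) and uses loopback as a stand-in; `allocate_host_candidate` is a hypothetical helper.

```python
import socket


def allocate_host_candidate(interface_ip: str) -> tuple[socket.socket, tuple[str, int]]:
    """Bind a UDP socket on a local interface and let the OS choose the port.

    The bound (IP, port) is a host candidate. The socket is returned as well,
    because the agent must keep it open to send and receive on that address.
    """
    family = socket.AF_INET6 if ":" in interface_ip else socket.AF_INET
    sock = socket.socket(family, socket.SOCK_DGRAM)
    sock.bind((interface_ip, 0))  # port 0: let the OS allocate a free port
    ip, port = sock.getsockname()[:2]  # IPv6 sockets return a 4-tuple
    return sock, (ip, port)


if __name__ == "__main__":
    # Loopback stands in for a real Ethernet, Wi-Fi or VPN interface here.
    sock, candidate = allocate_host_candidate("127.0.0.1")
    print("host candidate:", candidate)
    sock.close()
```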

Server-reflexive candidates

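These candidates carry a translated transport address on the public side of a NAT and are discovered by querying a STUN server. If TURN servers are not used, server-reflexive candidates are the most common type and, together with host candidates, the only type gathered up front (peer-reflexive candidates are only learned later, during connectivity checks).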

Relayed candidates

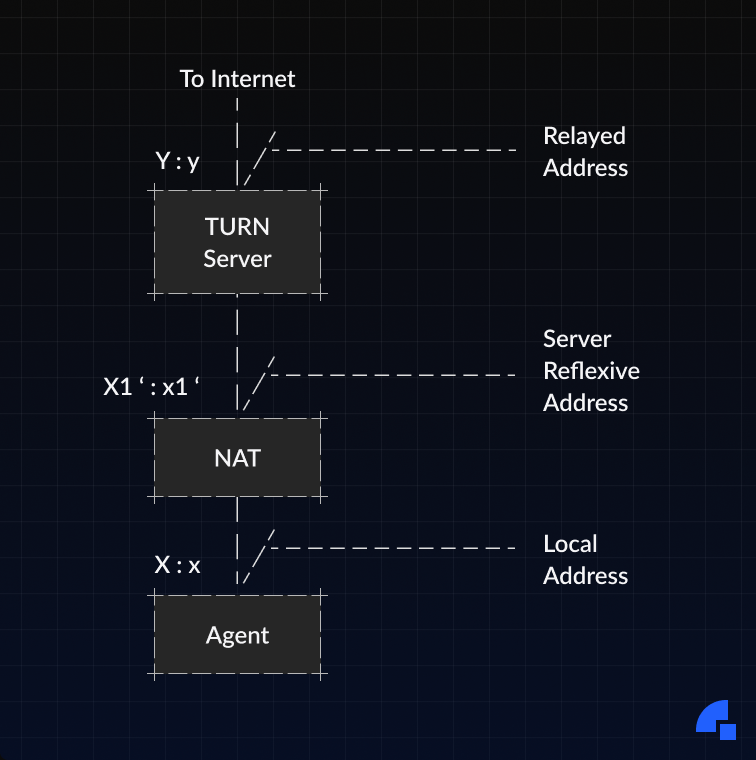

If TURN servers are used (as they should be), candidates with relayed addresses also become available. The figure above shows how all of these candidates relate to each other.

- When only STUN servers are utilized, the agent sends a STUN Binding request to its STUN server. The STUN server will inform the agent of the server-reflexive candidate X1':x1' by copying the source transport address of the Binding request into the Binding response.

- When the agent sends a TURN Allocate request from the IP address and port X:x, the NAT (if there is one) creates a binding X1':x1', mapping this server-reflexive candidate to the host candidate X:x. The NAT translates outgoing packets sent from the host candidate to the server-reflexive candidate, and translates incoming packets sent to the server-reflexive candidate back to the host candidate before forwarding them to the agent.

- When there are multiple NATs between the agent and the TURN server, the TURN request creates a binding on each NAT, but the agent only discovers the outermost server-reflexive candidate (the one nearest the TURN server). If the agent is not behind a NAT at all, the server-reflexive candidate is identical to its base (the host candidate it was obtained from), so it is redundant and is ignored.
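The STUN exchange from the first bullet can be sketched in a few dozen lines. This follows the STUN message format (RFC 8489): a 20-byte header with the magic cookie `0x2112A442` and a 12-byte transaction ID. Modern servers return the reflexive address in an XOR-MAPPED-ADDRESS attribute, an XOR-obfuscated copy of the request's source address, which keeps NAT ALGs from rewriting it in transit. This is a simplified sketch: it omits the attributes that real ICE connectivity checks carry (USERNAME, MESSAGE-INTEGRITY, FINGERPRINT, PRIORITY), and the function names are hypothetical.

```python
import ipaddress
import os
import socket
import struct

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request() -> tuple[bytes, bytes]:
    """Return a 20-byte Binding request with no attributes, plus its transaction ID."""
    txn_id = os.urandom(12)
    # Header: message type, message length (no attributes), magic cookie, transaction ID.
    return struct.pack("!HHI12s", BINDING_REQUEST, 0, MAGIC_COOKIE, txn_id), txn_id


def parse_xor_mapped_address(message: bytes, txn_id: bytes) -> tuple[str, int]:
    """Extract the server-reflexive address from a Binding success response."""
    msg_type, length, cookie, rx_txn = struct.unpack("!HHI12s", message[:20])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE or rx_txn != txn_id:
        raise ValueError("not a Binding success response to our request")
    offset, end = 20, 20 + length
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", message[offset:offset + 4])
        value = message[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS:
            family = value[1]
            port = struct.unpack("!H", value[2:4])[0] ^ (MAGIC_COOKIE >> 16)
            if family == 0x01:  # IPv4: address is XORed with the magic cookie
                raw = struct.unpack("!I", value[4:8])[0] ^ MAGIC_COOKIE
                return str(ipaddress.IPv4Address(raw)), port
            if family == 0x02:  # IPv6: XORed with magic cookie + transaction ID
                key = struct.pack("!I", MAGIC_COOKIE) + txn_id
                raw6 = bytes(a ^ b for a, b in zip(value[4:20], key))
                return str(ipaddress.IPv6Address(raw6)), port
            raise ValueError("unknown address family")
        offset += 4 + attr_len + (-attr_len % 4)  # attributes are padded to 4 bytes
    raise ValueError("response has no XOR-MAPPED-ADDRESS attribute")


def discover_srflx(sock: socket.socket, server: tuple[str, int], timeout: float = 2.0) -> tuple[str, int]:
    """Send one Binding request from `sock` and return the reflexive address.

    No retransmissions: a real agent retries, since UDP can drop packets.
    """
    request, txn_id = build_binding_request()
    sock.settimeout(timeout)
    sock.sendto(request, server)
    response, _ = sock.recvfrom(2048)
    return parse_xor_mapped_address(response, txn_id)
```

Calling `discover_srflx` with the socket behind a host candidate and the address of any STUN server you have access to should return that candidate's server-reflexive counterpart (X1':x1' in the bullets above).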

📡 Connectivity checks

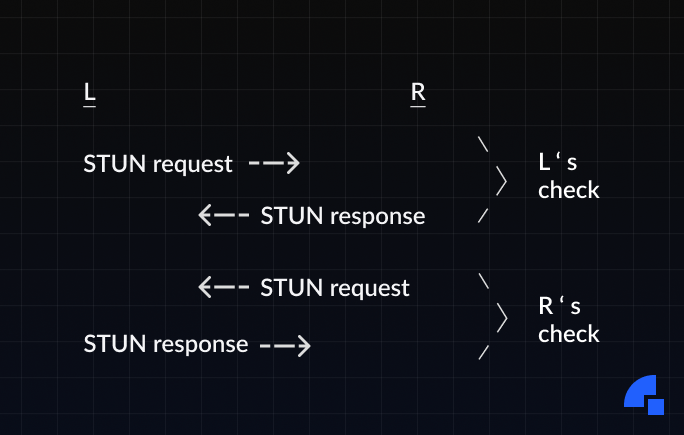

When L has gathered all of its candidates, it orders them from highest to lowest priority and sends them to R via the signaling server. R does the same and sends its candidates to L. The candidates are then paired up, and connectivity checks are performed on each pair in priority order. The whole process can be summarized as follows:

- Sort the candidate pairs in priority order.

- Perform connectivity checks on each candidate pair in priority order.

- Acknowledge checks received from the other agent.
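The steps above sort *pairs*, not individual candidates, so both agents need a pair priority they agree on. RFC 8445 (section 6.1.2.3) defines one in terms of ICE roles, which this post does not cover: one agent is "controlling" and the other "controlled". With G as the priority of the controlling agent's candidate and D as the controlled agent's, the pair priority is 2^32 * MIN(G,D) + 2 * MAX(G,D) + (G > D ? 1 : 0). A minimal sketch follows; `pair_priority` and `build_checklist` are hypothetical names, and the candidate priorities are illustrative values.

```python
def pair_priority(g: int, d: int) -> int:
    """Pair priority per RFC 8445, section 6.1.2.3.

    g: priority of the controlling agent's candidate in the pair
    d: priority of the controlled agent's candidate in the pair
    """
    return (1 << 32) * min(g, d) + 2 * max(g, d) + (1 if g > d else 0)


def build_checklist(pairs: list[tuple[int, int]], controlling: bool) -> list[tuple[int, int]]:
    """Sort (local_priority, remote_priority) pairs from highest to lowest priority."""
    def key(pair: tuple[int, int]) -> int:
        local, remote = pair
        # Map local/remote onto controlling/controlled so both agents
        # compute the same number for the same pair.
        g, d = (local, remote) if controlling else (remote, local)
        return pair_priority(g, d)

    return sorted(pairs, key=key, reverse=True)


# Hypothetical candidate priorities: relay/relay, host/host, srflx/host.
pairs = [(16777215, 16777215), (2130706431, 2130706431), (1694498815, 2130706431)]
for local, remote in build_checklist(pairs, controlling=True):
    print(local, remote)
```

Because the formula is built from the controlling and controlled priorities rather than "mine" and "yours", L and R arrive at the same ordering even though each sees the pair from its own side.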

Because both agents perform connectivity checks, each candidate pair is verified through a four-way handshake (a request and a response in each direction), as illustrated in the figure above.

Because a STUN Binding request is used for the connectivity check, the STUN Binding response will contain the agent's translated transport address on the public side of any NATs between the agent and its peer. If this transport address differs from those of the candidates the agent has already learned, it represents a new candidate, called a peer-reflexive candidate, which ICE then tests just like any other candidate.
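The peer-reflexive rule boils down to a membership test on the mapped address from the check's response. The sketch below assumes that address has already been extracted (for example, from the response's XOR-MAPPED-ADDRESS attribute) and that candidates are compared by IP and port only. RFC 8445 also assigns the new candidate a priority, base, and foundation, which are left out here; `learn_peer_reflexive` is a hypothetical helper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    ip: str
    port: int
    kind: str  # "host", "srflx", "prflx" or "relay"


def learn_peer_reflexive(mapped_ip: str, mapped_port: int,
                         local_candidates: list[Candidate]) -> Optional[Candidate]:
    """Record the mapped address reported in a check's Binding response.

    Returns the new peer-reflexive candidate, or None if the address was
    already one of our candidates.
    """
    if any((c.ip, c.port) == (mapped_ip, mapped_port) for c in local_candidates):
        return None
    prflx = Candidate(mapped_ip, mapped_port, "prflx")
    local_candidates.append(prflx)  # from now on it is handled like any other candidate
    return prflx


# Hypothetical: the response reveals an address no STUN server reported.
known = [Candidate("10.0.0.2", 50000, "host"), Candidate("203.0.113.5", 61000, "srflx")]
print(learn_peer_reflexive("198.51.100.9", 62000, known))
```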

Priority ordering

We mentioned a priority order in which the candidates are sorted before connectivity checks, but how is this order decided?

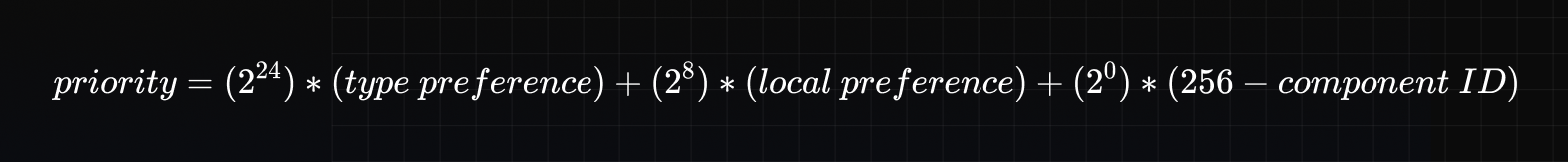

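RFC 8445 recommends computing a candidate's priority with the following formula:

$$
\text{priority} = 2^{24} \cdot \text{type preference} + 2^{8} \cdot \text{local preference} + 2^{0} \cdot (256 - \text{component ID})
$$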

This formula combines a preference for the candidate type (host, server-reflexive, peer-reflexive, or relayed), a preference for the IP address for which the candidate was obtained (the local preference), and the component ID.

The type preference must be an integer from 0 (lowest preference) to 126 (highest preference) inclusive, must be identical for all candidates of the same type, and must be different for candidates of different types.

The local preference must be an integer from 0 (lowest preference) to 65535 (highest preference) inclusive. When there is only a single IP address, this value should be set to 65535.

The recommended values for type preferences are 126 for host candidates, 110 for peer-reflexive candidates, 100 for server-reflexive candidates, and 0 for relayed candidates.

The component ID must be an integer between 1 and 256 inclusive.
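Putting the formula and the recommended values together gives a small function. This is a sketch under the assumptions stated above (single IP address, so a local preference of 65535 by default); `candidate_priority` and `TYPE_PREFERENCE` are hypothetical names, not part of any library.

```python
# Recommended type preferences from RFC 8445.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


def candidate_priority(kind: str, local_pref: int = 65535, component_id: int = 1) -> int:
    """priority = 2^24 * type pref + 2^8 * local pref + 2^0 * (256 - component ID)"""
    type_pref = TYPE_PREFERENCE[kind]
    if not 0 <= local_pref <= 65535:
        raise ValueError("local preference must be between 0 and 65535")
    if not 1 <= component_id <= 256:
        raise ValueError("component ID must be between 1 and 256")
    # Shifting left by 24 and 8 bits is the same as multiplying by 2^24 and 2^8.
    return (type_pref << 24) + (local_pref << 8) + (256 - component_id)


for kind in TYPE_PREFERENCE:
    print(kind, candidate_priority(kind))
```

The bit layout is what makes the ordering strict: the local-preference and component terms together never exceed 2^24 - 1, so a higher type preference always wins, and within one type a higher local preference always beats a lower component ID.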

🚀 ICE lite

Certain ICE agents are always directly connected to the public internet and have a public IP address that can receive packets from any other agent. A special "lite" version of ICE can be used to simplify ICE support on such devices. ICE lite is frequently used by Selective Forwarding Units (SFUs), including Dyte's!

Lite agents only use host candidates and do not perform connectivity checks, though they do respond to them.

Because lite implementations only use host candidates, each IP address will have zero or one candidate, regardless of the IP address family.
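Since a lite agent never sends checks of its own, its side of the handshake is simply answering Binding requests with the sender's address. The sketch below builds such a response with an XOR-MAPPED-ADDRESS attribute (IPv4 only). It is deliberately incomplete: a real lite agent must also validate USERNAME and MESSAGE-INTEGRITY and add FINGERPRINT, none of which is shown. `answer_binding_request` and `serve_lite` are hypothetical helpers.

```python
import ipaddress
import socket
import struct

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def answer_binding_request(request: bytes, src_ip: str, src_port: int) -> bytes:
    """Build a Binding success response echoing the sender's IPv4 address."""
    if len(request) < 20:
        raise ValueError("too short to be a STUN message")
    msg_type, _length, cookie, txn_id = struct.unpack("!HHI12s", request[:20])
    if msg_type != BINDING_REQUEST or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding request")
    # XOR the port with the top 16 bits of the cookie, the address with all of it.
    x_port = src_port ^ (MAGIC_COOKIE >> 16)
    x_addr = int(ipaddress.IPv4Address(src_ip)) ^ MAGIC_COOKIE
    attr = struct.pack("!HHBBHI", XOR_MAPPED_ADDRESS, 8, 0, 0x01, x_port, x_addr)
    # The response reuses the request's transaction ID so the sender can match it.
    header = struct.pack("!HHI12s", BINDING_SUCCESS, len(attr), MAGIC_COOKIE, txn_id)
    return header + attr


def serve_lite(sock: socket.socket) -> None:
    """Answer Binding requests on an IPv4 UDP socket; never send checks."""
    while True:
        data, addr = sock.recvfrom(2048)
        ip, port = addr[:2]
        try:
            sock.sendto(answer_binding_request(data, ip, port), (ip, port))
        except ValueError:
            pass  # not a Binding request (e.g. media); handled elsewhere
```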

Congratulations if you're still here 😊

You now have a basic understanding of how ICE works under the hood and are ready to fearlessly connect to peers around the world!

To learn more about ICE, check out RFC 8445 and WebRTC for the Curious.

Try Dyte if you don't want to deal with the hassle of managing your own peer-to-peer connections!

If you haven't heard of Dyte yet, go to https://dyte.io to learn how our SDKs and libraries revolutionize the live video and voice-calling experience. Don't just take our word for it; try it for yourself! Dyte offers 10,000 free minutes every month to get you started quickly.

If you have any questions or simply want to chat with us, please contact us through support or visit our developer community forum. Looking forward to it!